What makes good software? Separation of concerns has to be near the top of the list: your application should have distinct tiers, each dealing with a particular set of concerns. But what does an enterprise software stack actually look like? I've been asked this question a number of times, so I thought I would describe one of the ways in which I write software for SOA environments.

To avoid being shot down in flames, let me be clear: I'm not advocating any particular dogmatic approach here, just presenting one way that has worked for me in several service-oriented environments.

So, here's the simplified picture of the stack:

Database

At the very top of the stack we have the database or persistence engine - the place where our application is going to store its data. This doesn't have to be a SQL database; it is more the concept of a place to store information. To this end, this box could be satisfied by XML, an object database, text files, other external services and so on. Indeed, there may even be multiple boxes.

The Data Abstraction Layer

This layer is responsible for hooking repositories up to persistence. It should expose an engine flexible enough to work with a variety of types of physical repositories in a natural manner. Generally you won't write your own data abstraction layer, but will instead re-use one of many different technologies already available such as NHibernate, LINQ to Entities, LINQ to SQL and so on.

Repositories

The repositories are responsible for fulfilling requests to obtain and modify data. This allows a further level of abstraction that describes the purpose of the code rather than the implementation. For example, a service will ask a repository to "SelectAllCustomers" rather than directly executing a LINQ query.

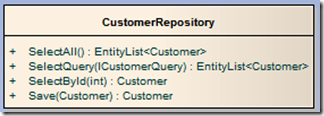

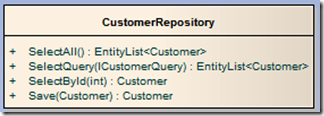

Repositories deal in one thing and one thing only - domain entities. Their inputs and outputs are usually one or more entity objects from the domain (see below). For example, suppose you were writing a pet shop application; you might have a repository for dealing with customers as follows:

As you can see, we have methods for retrieving customers and for updating them. This makes working with customers extremely clear and self-describing. The first method, SelectAll(), simply returns all of the customers in the system (as customer domain objects). SelectQuery allows the caller to describe how to get the data, sort it and present it. For example, using the CustomerQuery, one might specify the sort order and direction, filters on fields, and which rows to return for pagination.
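As a rough illustration of the repository described above, here is a minimal Python sketch. The method names mirror SelectAll, SelectById and SelectQuery from the text; the fields on `CustomerQuery`, the `update` method signature and the in-memory implementation are assumptions made for the example, standing in for a repository backed by a real data abstraction layer.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Customer:
    id: int
    name: str


@dataclass
class CustomerQuery:
    """Hypothetical query object: filter, sort order and paging window."""
    name_contains: Optional[str] = None
    sort_by: str = "id"
    descending: bool = False
    skip: int = 0
    take: Optional[int] = None


class CustomerRepository(Protocol):
    """The contract the service tier codes against."""
    def select_all(self) -> list[Customer]: ...
    def select_by_id(self, customer_id: int) -> Optional[Customer]: ...
    def select_query(self, query: CustomerQuery) -> list[Customer]: ...
    def update(self, customer: Customer) -> None: ...


class InMemoryCustomerRepository:
    """Stand-in implementation; a real one would delegate to the DAL."""

    def __init__(self, customers: list[Customer]) -> None:
        self._rows = {c.id: c for c in customers}

    def select_all(self) -> list[Customer]:
        return list(self._rows.values())

    def select_by_id(self, customer_id: int) -> Optional[Customer]:
        return self._rows.get(customer_id)

    def select_query(self, query: CustomerQuery) -> list[Customer]:
        # Filter, then sort, then apply the pagination window.
        rows = [
            c for c in self._rows.values()
            if query.name_contains is None
            or query.name_contains.lower() in c.name.lower()
        ]
        rows.sort(key=lambda c: getattr(c, query.sort_by), reverse=query.descending)
        end = None if query.take is None else query.skip + query.take
        return rows[query.skip:end]

    def update(self, customer: Customer) -> None:
        self._rows[customer.id] = customer
```

Callers only ever see domain entities and intent-revealing method names; how the rows are actually fetched stays hidden behind the repository.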

Each type of conceptual data would have its own repository in the stack.

(One alternative to using repositories is the active record pattern, where the entities themselves expose methods similar to those exposed by repositories.)

Domain Entities

The domain model (in this instance) is a representation of the various entities that make up the problem you are describing along with their relationships and any operational logic (business rules).

The following is an example of a simple domain model, working again with the fictitious pet shop example.

In the diagram above we can see we have a customer entity, which has attributes describing the customer. It also contains a collection of Order entities representing the orders that this customer has made. Each order must have a customer, but a customer can have 0 or more orders.

Where an order exists, it will have attributes of its own to describe the order, along with a collection of order lines (0 or more). Each order must have one and only one customer.

Moving down the graph, the order line will know which order it belongs to and also reference a product that the line of the order corresponds to. Each order line can only be within one order and it must also reference a product.

As you can see, the domain model is just the object graph of the entities it represents. There may of course be more meat on the bones of your real life domain model, including operations on entities to implement business rules and such like.
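The object graph described in this section could be sketched roughly as follows. The entity names and relationships come from the text; the specific attributes (`name`, `price`, `quantity`) and the `total` business rule are assumptions added only to give the example some shape.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from decimal import Decimal


# eq=False: entities are compared by identity, which also avoids
# recursive comparisons through the cyclic customer/order references.
@dataclass(eq=False)
class Product:
    id: int
    name: str
    price: Decimal


@dataclass(eq=False)
class OrderLine:
    order: Order        # each line belongs to exactly one order
    product: Product    # and must reference a product
    quantity: int = 1

    def total(self) -> Decimal:
        return self.product.price * self.quantity


@dataclass(eq=False)
class Order:
    id: int
    customer: Customer                                     # exactly one customer
    lines: list[OrderLine] = field(default_factory=list)   # 0 or more lines

    def add_line(self, product: Product, quantity: int = 1) -> OrderLine:
        line = OrderLine(self, product, quantity)
        self.lines.append(line)
        return line

    def total(self) -> Decimal:
        # Example of a simple business rule living on the entity.
        return sum((line.total() for line in self.lines), Decimal("0"))


@dataclass(eq=False)
class Customer:
    id: int
    name: str
    orders: list[Order] = field(default_factory=list)      # 0 or more orders

    def place_order(self, order_id: int) -> Order:
        order = Order(order_id, self)
        self.orders.append(order)
        return order
```

Creating orders through `place_order` and lines through `add_line` keeps both ends of each relationship in step, so an order can never exist without its customer.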

So, how does the repository return all of this information - where we simply invoke SelectById(10) to get the customer entity with an ID of 10? Well, the answer is actually in the DAL and repository layer.

NHibernate and other OR/M technologies allow for something called lazy loading, where the initial query loads the Customer object but wraps its related properties in placeholders (called proxies). When one of these is first accessed, the OR/M automatically goes back to the database to get the entities required.
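To make the idea concrete, here is a hand-rolled sketch of a lazy collection. It is not how NHibernate implements its proxies; `LazyList`, `load_orders_for` and the `queries_run` log are hypothetical names used only to show that the second query is deferred until the collection is first touched.

```python
from typing import Callable, Generic, Iterator, Optional, TypeVar

T = TypeVar("T")


class LazyList(Generic[T]):
    """Hypothetical proxy: defers running `loader` until first use."""

    def __init__(self, loader: Callable[[], list[T]]) -> None:
        self._loader = loader
        self._items: Optional[list[T]] = None

    def _load(self) -> list[T]:
        if self._items is None:          # first access triggers the query
            self._items = self._loader()
        return self._items

    def __iter__(self) -> Iterator[T]:
        return iter(self._load())

    def __len__(self) -> int:
        return len(self._load())


class Customer:
    def __init__(self, customer_id: int, name: str, orders: LazyList[str]) -> None:
        self.id = customer_id
        self.name = name
        self.orders = orders             # not loaded yet


queries_run: list[str] = []


def load_orders_for(customer_id: int) -> list[str]:
    # Stands in for a round trip to the database.
    queries_run.append(f"SELECT * FROM orders WHERE customer_id = {customer_id}")
    return ["order-1", "order-2"]


# Loading the customer does not load its orders.
customer = Customer(10, "Ann", LazyList(lambda: load_orders_for(10)))
```

Nothing is recorded in `queries_run` until `customer.orders` is iterated or measured, and the result is cached so later accesses do not query again.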

A second alternative is to describe how deep you want the graph to load in the repository implementation (or even make this part of the query parameter, to allow control from further down the stack). Again, most OR/Ms allow you to specify what you want to load from the graph, such as loading the orders and order lines for a customer at the same time as the customer itself. This usually offers some performance gains too, as only one query is executed. (In fact, this is your only option if you are using LINQ to Entities as of V1, which doesn't support transparent lazy loading.)

Regardless, your DAL or your repositories should be able to hydrate an object graph based on a request from further down the stack, and should also ensure that when you get an object from the database, you only ever get one instance of it. (iBATIS, for example, doesn't do this by default, and you must implement your own Identity Map pattern for the repositories to use.)
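A minimal sketch of the Identity Map idea, assuming a repository that loads customers by ID from some store. The `IdentityMap` class and its `get_or_load` method are illustrative names rather than any particular library's API, and the dictionary of rows stands in for the database.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Customer:
    id: int
    name: str


class IdentityMap:
    """Remembers every entity already loaded, keyed by ID."""

    def __init__(self) -> None:
        self._loaded: dict[int, Customer] = {}

    def get_or_load(
        self, customer_id: int, load: Callable[[int], Optional[Customer]]
    ) -> Optional[Customer]:
        if customer_id not in self._loaded:
            entity = load(customer_id)
            if entity is None:
                return None
            self._loaded[customer_id] = entity
        return self._loaded[customer_id]


class CustomerRepository:
    def __init__(self, rows: dict[int, str]) -> None:
        self._rows = rows                      # stands in for the database
        self._identity_map = IdentityMap()

    def _load_from_store(self, customer_id: int) -> Optional[Customer]:
        name = self._rows.get(customer_id)
        return None if name is None else Customer(customer_id, name)

    def select_by_id(self, customer_id: int) -> Optional[Customer]:
        return self._identity_map.get_or_load(customer_id, self._load_from_store)
```

Two calls to `select_by_id(10)` now hand back the very same object, so a change made through one reference is visible through the other.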

Service implementations

These are the actual end-points of your service - the ASMX you call, or in the case of WCF (my preference), the endpoints you've defined and implemented through service contracts.

The service is responsible for taking an inbound request object, working out what to do next, invoking the appropriate repository methods to get or modify data, and then assembling a response for the caller.

In other words, the service is invoked using a data contract in the form of a data transfer object (see below). If necessary, it hydrates a domain object graph ready for use by the repositories, then invokes them and gets back domain objects. Finally, it uses assemblers (see below) to convert the full data representation from the domain into the structure of data that the client application is actually interested in (DTOs).
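The flow just described (DTO in, repository call, assembler, DTO out) might look roughly like this. All class and function names here are hypothetical, and a plain Python class stands in for what would be a WCF service contract and implementation in the original setting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:                  # domain entity: the full representation
    id: int
    name: str
    email: str


@dataclass(frozen=True)
class GetCustomerRequest:        # inbound DTO
    customer_id: int


@dataclass(frozen=True)
class CustomerSummary:           # outbound DTO: only what the client needs
    id: int
    name: str


class CustomerRepository:
    def __init__(self, customers: list[Customer]) -> None:
        self._by_id = {c.id: c for c in customers}

    def select_by_id(self, customer_id: int) -> Optional[Customer]:
        return self._by_id.get(customer_id)


def to_summary(customer: Customer) -> CustomerSummary:
    """Assembler: domain entity -> DTO."""
    return CustomerSummary(id=customer.id, name=customer.name)


class CustomerService:
    def __init__(self, repository: CustomerRepository) -> None:
        self._repository = repository

    def get_customer(self, request: GetCustomerRequest) -> Optional[CustomerSummary]:
        # 1. Work out what the request needs, 2. ask the repository,
        # 3. assemble the response DTO for the caller.
        customer = self._repository.select_by_id(request.customer_id)
        return None if customer is None else to_summary(customer)
```

The client never sees the `Customer` entity or its email address; only the `CustomerSummary` crosses the service boundary.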

Data Transfer Objects - DTOs

The purpose of DTOs is to represent the data needed to complete an activity in its most minimal form for transmission over the wire. For example, where a client application works with customers but is only interested in the customer's name and ID, and not the other 20 fields, your DTO would represent customers as name and ID only. DTOs are lightweight representations.
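As a small sketch of the name-and-ID example above: the DTO carries just those two fields and nothing else. The `CustomerNameDto` name and the use of JSON as the wire format are assumptions for illustration; in a WCF service the serialisation would be handled by the data contract.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CustomerNameDto:
    """Only the fields this particular client activity needs."""
    id: int
    name: str


def to_wire(dto: CustomerNameDto) -> str:
    # Serialise the DTO for transmission (JSON chosen only for the example).
    return json.dumps(asdict(dto), sort_keys=True)


def from_wire(payload: str) -> CustomerNameDto:
    return CustomerNameDto(**json.loads(payload))
```

Because the DTO is immutable and has no behaviour, it is cheap to serialise and safe to pass between tiers.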

Communication between client applications and the service tier is done only through DTOs.

Interestingly, DTO-like objects may also be present in your domain (though not called DTOs). You may have several different representations of a customer, for instance, in order to optimise how much information flows across the network between the domain model and the database. The trade-off is how simple you want to keep your domain versus how much control you want over database performance.

Assemblers

The assemblers are used by the service implementations to map between the DTO and domain representations. For example, if you have a DTO contract for updating a customer, an assembler would take this DTO and map it to a valid customer domain object. This would then be passed to the repositories for persistence.
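An assembler for the update-customer example could be sketched like this. The field names and the specific validation and normalisation rules are assumptions; the point is only that the mapping in both directions lives in one place.

```python
from dataclasses import dataclass


@dataclass
class Customer:                   # domain entity
    id: int
    name: str
    email: str


@dataclass(frozen=True)
class UpdateCustomerDto:          # DTO contract for the update operation
    id: int
    name: str
    email: str


class CustomerAssembler:
    """Maps between the DTO shape and the domain shape."""

    @staticmethod
    def to_domain(dto: UpdateCustomerDto) -> Customer:
        name = dto.name.strip()
        if not name:
            # Refuse to build an invalid domain object from bad input.
            raise ValueError("customer name must not be empty")
        return Customer(id=dto.id, name=name, email=dto.email.strip().lower())

    @staticmethod
    def to_dto(customer: Customer) -> UpdateCustomerDto:
        return UpdateCustomerDto(customer.id, customer.name, customer.email)
```

The service would call `CustomerAssembler.to_domain(dto)` and hand the resulting `Customer` to the repository, keeping mapping code out of both the service logic and the domain.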

Service Proxies

Hopefully this requires very little explanation. The service proxy is the client-side counterpart of the service implementation: it maps calls onto the communications channel and invokes the actual code on the service tier. Your client application works exclusively with the service proxies and the DTOs that they accept and return.
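In a WCF setting the proxy is typically generated for you, but the shape of the idea can be sketched by hand. Here the channel is modelled as a plain function taking an operation name and a request payload; `Channel`, `fake_channel` and the JSON payloads are all assumptions standing in for the real transport.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass(frozen=True)
class CustomerSummary:            # DTO returned to the client
    id: int
    name: str


# A channel sends (operation, request payload) and returns the response payload.
Channel = Callable[[str, str], str]


class CustomerServiceProxy:
    """Client-side stand-in that forwards calls over the channel."""

    def __init__(self, channel: Channel) -> None:
        self._channel = channel

    def get_customer(self, customer_id: int) -> CustomerSummary:
        request = json.dumps({"customer_id": customer_id})
        response = self._channel("GetCustomer", request)
        return CustomerSummary(**json.loads(response))


def fake_channel(operation: str, payload: str) -> str:
    # Stands in for the network hop to the service tier.
    if operation != "GetCustomer":
        raise ValueError(f"unknown operation: {operation}")
    customer_id = json.loads(payload)["customer_id"]
    return json.dumps(asdict(CustomerSummary(customer_id, "Ann")))
```

Client code calls `CustomerServiceProxy(fake_channel).get_customer(10)` as if it were a local method and gets a DTO back, without knowing anything about the transport.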

Conclusion

This is just one way to build scalable N-tiered enterprise applications. Several of the concepts presented here are interchangeable with other methods, such as active record instead of domain + repository. Speaking from personal experience, I have seen the above work very well on large-scale implementations.

It is also worth mentioning some supporting concepts. To truly realise the benefit of the above implementation, one would need to use interface-driven development to allow any piece of the stack to be mocked and unit tested effectively. In addition, dependency injection can make your life easier as the scale of the system grows, automatically resolving dependencies between objects across the tiers.
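A small sketch of both ideas together: the service depends only on a repository interface, the dependency is supplied through the constructor, and a test replaces it with a mock. The names are hypothetical, and the injection here is done by hand rather than by a container.

```python
from dataclasses import dataclass
from typing import Optional, Protocol
from unittest.mock import Mock


@dataclass
class Customer:
    id: int
    name: str


class CustomerRepository(Protocol):
    """The interface the service depends on, not a concrete class."""
    def select_by_id(self, customer_id: int) -> Optional[Customer]: ...


class CustomerService:
    def __init__(self, repository: CustomerRepository) -> None:
        # Constructor injection: the caller decides which repository to use.
        self._repository = repository

    def customer_name(self, customer_id: int) -> str:
        customer = self._repository.select_by_id(customer_id)
        return "unknown" if customer is None else customer.name


# In a unit test, a mock takes the place of the real repository.
mock_repository = Mock(spec=["select_by_id"])
mock_repository.select_by_id.return_value = Customer(10, "Ann")
service = CustomerService(mock_repository)
```

The service can now be tested with no database at all, and the same constructor is where a dependency injection container would supply the real repository in production.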

In the future I will post a simple example application with source code that uses all of the above techniques to demonstrate the implementation specifics. I hope this was worth writing and someone finds it useful.