I often use the sqlmetal tool to generate a LINQ to SQL (L2SQL) model from a database, as follows:

sqlmetal /server:(local) /database:myDatabaseName /views /functions /sprocs /code:..\UI\Models\LinqSql\myDatabaseName_gen.cs /language:C# /namespace:myProject.UI.Models.LinqSql /context:myDatabaseContext /pluralize

This generates LINQ to SQL classes for my whole database structure, for example:

[global::System.Data.Linq.Mapping.TableAttribute(Name="dbo.Bills")]

public partial class Bill : INotifyPropertyChanging, INotifyPropertyChanged

However, with FxCop code analysis turned on (you DO have it turned on and set to error, don’t you!?), the generated code produces a bunch of errors, such as CA2227, which asks for collection properties to be read-only.

This is generated code, but by default sqlmetal doesn’t add the attribute needed to exclude it from code analysis (the GeneratedCodeAttribute). You could manually edit the generated code each time you run sqlmetal to add this attribute, or manually maintain a file of partial classes with the attribute added there. I wanted something a bit more elegant though, so I went off looking at T4 templates.

T4 templates allow us to write template-based code that generates the actual source code when you save the template, when you manually “run custom tool”, or as part of your build process. T4 templates use a syntax similar to ASP.NET’s, but slightly different. First of all, I heartily recommend you get the T4 template editor from these guys: http://t4-editor.tangible-engineering.com/. The free version is fine and gives you syntax highlighting and IntelliSense, which are missing in VS 2010.

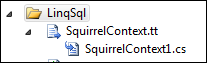
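To give a feel for the syntax, here is a minimal sketch of a template. It is not taken from my project, and the Greet helper and the comments it generates are made up purely for illustration. Directives sit in <#@ #>, statements in <# #>, expressions in <#= #>, and helper methods in a <#+ #> class feature block.

```csharp
<#@ template language="C#" #>
<#@ output extension=".cs" #>
// Generated by a T4 template; edit the .tt file instead.
<# for (int i = 1; i <= 3; i++) { #>
// <#= Greet(i) #>
<# } #>
<#+
// Hypothetical helper, declared in a class feature block
string Greet(int n)
{
    return "Hello from line " + n;
}
#>
```

Saving a template like this should produce a .cs file containing the header comment followed by three generated comment lines, one per loop iteration.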

Simply adding a .TT file to your solution and then writing the template code results in a code file being generated for you, nested beneath the .TT file.

Within this template, I am able to get a handle on the containing project, inspect its code model and generate partial classes with the necessary GeneratedCodeAttribute applied. This results in the following classes being generated.

using System.CodeDom.Compiler;

namespace myProject.UI.Models.LinqSql

{

[GeneratedCode("T4 LinqSqlFixer", "1.0")]

public partial class EntityA {}

[GeneratedCode("T4 LinqSqlFixer", "1.0")]

public partial class EntityB {}

[GeneratedCode("T4 LinqSqlFixer", "1.0")]

public partial class EntityC {}

[GeneratedCode("T4 LinqSqlFixer", "1.0")]

public partial class Bill {}

}

With this in place, code analysis now ignores the generated LINQ to SQL code and I don’t have to muck around suppressing errors or, God forbid, turn off code analysis altogether.
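If you want to double-check that every class really picked up the attribute, a small reflection check is one way to do it. This is only a sketch: GeneratedCodeCheck, FindUnmarkedClasses and the Demo.Models classes are hypothetical names I made up for the example, standing in for your own sqlmetal entities. It relies on the fact that an attribute applied to any part of a partial class applies to the whole compiled class.

```csharp
using System;
using System.CodeDom.Compiler;
using System.Linq;
using System.Reflection;

namespace Demo.Models
{
    // Hypothetical stand-ins for sqlmetal entities
    [GeneratedCode("T4 LinqSqlFixer", "1.0")]
    public partial class Marked { }

    public partial class Unmarked { }
}

public static class GeneratedCodeCheck
{
    // Returns the full names of top-level classes in the given namespace
    // that do not carry GeneratedCodeAttribute.
    public static string[] FindUnmarkedClasses(Assembly assembly, string ns)
    {
        return assembly.GetTypes()
            .Where(t => t.IsClass && !t.IsNested && t.Namespace == ns)
            .Where(t => !t.IsDefined(typeof(GeneratedCodeAttribute), false))
            .Select(t => t.FullName)
            .OrderBy(name => name, StringComparer.Ordinal)
            .ToArray();
    }

    public static void Main()
    {
        string[] unmarked = FindUnmarkedClasses(
            typeof(GeneratedCodeCheck).Assembly, "Demo.Models");

        foreach (string name in unmarked)
        {
            Console.WriteLine("Missing GeneratedCode: " + name);
        }
    }
}
```

In this demo only Demo.Models.Unmarked is reported. Pointed at the real assembly and namespace, an empty result would suggest the template covered every entity.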

My full T4 template is as follows:

<#@ template hostSpecific="true" #>

<#@ assembly name="System.Core.dll" #>

<#@ assembly name="EnvDTE.dll" #>

<#@ import namespace="EnvDTE" #>

<#@ import namespace="System.Collections.Generic" #>

/*

This file was generated by a T4 Template. It attempts to add the GeneratedCode

attribute to any classes generated by sqlmetal - provided your sqlmetal classes

are in an isolated namespace.

The reason being, if the GeneratedCode attribute is not associated with the

generated classes, they are analysed by the code analysis tool.

Don't modify the generated .cs file directly, instead edit the .tt file.

*/

using System.CodeDom.Compiler;

<#

// HERE: Specify the namespaces to fix up

FixClassNameSpaces("myProject.UI.Models.LinqSql");

#>

<#+

void FixClassNameSpaces(string fixNamespace)

{

WriteLine("namespace {0}\r\n{{", fixNamespace);

string [] classes = GetClassesInNamespace(fixNamespace);

foreach( string className in classes )

{

WriteLine("\t[GeneratedCode(\"T4 LinqSqlFixer\", \"1.0\")]");

WriteLine("\tpublic partial class {0} {{}}\r\n", className);

}

WriteLine("}");

}

string [] GetClassesInNamespace(string ns)

{

List<string> results = new List<string>();

IServiceProvider host = (IServiceProvider) Host;

DTE dte = (DTE) host.GetService(typeof(DTE));

// Locate the .tt file's own project item, then the project that contains it

ProjectItem templateItem = dte.Solution.FindProjectItem(Host.TemplateFile);

Project project = templateItem.ContainingProject;

CodeNamespace nsElement = SearchForNamespaceElement(project.CodeModel.CodeElements, ns);

if( nsElement != null )

{

foreach( CodeElement code in nsElement.Members )

{

if( code.Kind == vsCMElement.vsCMElementClass )

{

CodeClass codeClass = (CodeClass) code;

results.Add( codeClass.Name );

}

}

}

return results.ToArray();

}

CodeNamespace SearchForNamespaceElement( CodeElements elements, string ns )

{

foreach( CodeElement code in elements )

{

if( code.Kind == vsCMElement.vsCMElementNamespace )

{

CodeNamespace codeNamespace = (CodeNamespace)code;

if( ns.Equals(code.FullName) )

{

// This is the namespace we're looking for

return codeNamespace;

}

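else if( ns.StartsWith(code.FullName + ".", StringComparison.Ordinal) )

{

// This is going in the right direction, descend into the namespace,

// but keep checking siblings if the target isn't found beneath it

CodeNamespace found = SearchForNamespaceElement(codeNamespace.Members, ns);

if( found != null ) return found;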

}

}

}

return null;

}

#>