In this week’s SDLC-related post, I’m going to try to explain how to structure a successful development project: one that, when it goes live, puts happy smiles on users’ faces and, because it addresses their needs, massages away the pains they have been having. I’m talking here, of course, about bespoke (internal) development projects rather than a software house writing the next version of “Micropple iWordPad 2015 Enterprise Edition SP2”. There are subtle yet important differences between the two types of project, including:

- Software house
  - Low cost/revenue per user head.
  - Focus on lower cost, high-volume sales requires the product to be best in market.
  - High degree of spit and polish: can spend significant money on UI design to ensure the product looks “the nuts”.
  - Ship dates are fluid, dictated by feature completion.
- Bespoke
  - Is best in market by definition, as it addresses the business’s very specific needs.
  - Requires spit and polish, but the focus is on cost, usability, functionality and implementation time.
    - A fine balance: lack of spit = user rejection, too much = missed functionality.
  - Very high cost per user head.

Spit and polish

There’s one contentious issue there. It almost seems like I’m saying that internal or bespoke software won’t be as high quality as a software product from a vendor, and that’s not what I mean. Quality must still be high, but when it comes to going the extra mile for the most appealing, beautiful and slick UI, it is unfortunately a practical truth that internal development can’t afford to put the same time and money into this as a software house. Internal projects face a trade-off between a high level of spit and polish and factors such as cost and timescales.

Consider a business that is spending £1.4 million on a custom system to, let’s say, manage insurance claims, with a user base of 200 claim handlers: the cost per seat of that software is £7,000. Now consider the same business buying something off the shelf for £250,000 that meets around 70% of their requirements: the cost per seat is now £1,250. However, the business understands that the 30% of missing requirements means the package won’t deliver on its key objectives and, because their business is so unique (they archive their claim documentation in shredded format for security and file it with a warehouse on the moon), they need to go down the bespoke route. The off-the-shelf package won’t cut the mustard.

Now they are spending an extra £5,750 per user, so the product damn well better be good and meet the moon-archiving objectives. You can’t say internal software doesn’t need to be high quality; it absolutely, positively must. But when faced with the choice between tweaking individual pixels in form layouts and building feature Z (which will save them another £100k per year), the business will choose feature Z every time.

That is why I say internal software doesn’t require the same level of spit and polish. It still needs to be slick, but whereas the software house has to spend money polishing its UI to make sales, internal software will often concentrate on features. Those features will still be polished, just not to the same extent.
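The per-seat comparison above is simple division. A minimal Python sketch that reproduces the illustrative figures (the £1.4 million, £250,000 and 200-seat numbers are the hypothetical example from the text; `cost_per_seat` is just an illustrative helper):

```python
# Worked version of the cost-per-seat comparison, using the example's
# illustrative figures (GBP, 200 claim handlers).

def cost_per_seat(total_cost: float, seats: int) -> float:
    """Return the cost of the system per user seat."""
    return total_cost / seats

SEATS = 200
bespoke = cost_per_seat(1_400_000, SEATS)   # custom claims system
packaged = cost_per_seat(250_000, SEATS)    # off-the-shelf package (~70% fit)
premium = bespoke - packaged                # extra spend per user for bespoke

print(f"bespoke £{bespoke:,.0f}, packaged £{packaged:,.0f}, premium £{premium:,.0f}")
```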

OK, so that was a long-winded way of saying this post is more applicable to bespoke developments than to the software house. Both should follow the same core principle, listen to the customer, but the bespoke team has one advantage: the customer sits next to them, in the same building, or at least in the same company.

Engaging the customer

Projects often fail because of a lack of engagement with the users. Users can feel that the new system has been forced upon them without consultation and, to be frank, the users of your shiny new system are the ones with the deepest understanding of their domain and often have the best suggestions for improvement. It’s therefore important to engage with the users of your system in a variety of ways and let them have their say in how the system should function. So how do we do this at the various stages of the project?

The kick off meeting

At the start of the project, I usually try to get as many users as possible together in one place and present the project to them. This early presentation is a great time to outline the vision for the project and what we’re hoping to achieve, and to set the expectation that you want them to be involved at various points. Describing the system, how we initially think it will work and what problems it will address generates excitement about the project and gets users thinking about how they want to see it progress.

Of course, by this point you’ve already done some up-front estimating to get the client to agree to spend a million pounds with you, and the business will have set goals for when the system needs to be available, so you are already working within constraints. Even so, it should be straightforward to accommodate users’ suggestions and ideas, though not all of them: you will always find someone with a wacky idea that has no mileage, so as a leader on the project you’re going to need some diplomacy skills!

At the end of the kick off meeting, everyone should understand the objectives of the project and know that they will be expected to contribute in the coming weeks and months. This is their opportunity to change things for the better and to help build a system that lets them do their jobs more productively. Allow time at the end for questions and let people voice their concerns and ideas, but guide them towards saving the bulk of their input for the workshops.

Iterating and workshops

I’ve mentioned in earlier posts that the detailed functional design work should be done an iteration ahead of the actual construction. To get the detail for this design, I like to use workshop sessions with small groups of users. There’s nothing particularly special about these sessions: you need a maximum of around six users in a room, a whiteboard and someone to record important details.

With everyone gathered, we explain the purpose of the workshop and its scope (you might have users who work across different functional areas and are keen to get their ideas across), then ask them to describe their job, the processes they follow, where improvements could be made and what the new application should look like. Time-box the session to around an hour, as people’s attention starts to wane beyond this. By the end you should have whiteboards full of process charts, low-fidelity UI designs and some idea of what data you need to capture and how it relates. This, combined with the output from several other workshops, then feeds into the design documentation.

An important point to note here: a successful workshop starts with just the materials I’ve mentioned above. I’ve seen misguided analysts run workshops where the starting point was a Visio diagram or presentation of how they thought the users worked and the processes they followed, which they then presented to the users instead of asking them to collaborate on the design. The problem with this is that users switch off. They conclude that you already know everything you need to know (or think you do, you fool!) and generally won’t engage in a productive design session. If anything, they will tear your presentation to pieces, because it’s being pitched to them as the way to work rather than as an invitation to contribute.

Requirements and documentation

From the workshops and all the useful information gleaned from the users, the analyst then works with the architect to draw up the functional specifications. These are simple use cases, written for the users, that describe how the system should operate, including:

- Case properties
  - Who does it.
  - How the case is triggered.
  - What conditions dictate that the use case is executed.
  - What the outcome should be.
- Summary
  - A brief description of the purpose and flow of the use case.
- Normal path
  - The path through the use case, in bullet points:
    - What the user does, what controls they interact with and the data that is captured.
    - The rules that are checked (conditional logic that can trigger an alternate path).
- Alternate paths
  - The alternative paths through the use case raised by conditional logic in the normal path.

This document should avoid technical implementation details and any specific technology, and should instead talk in the language of the user. The documents are then reviewed for accuracy by the users, and a refinement cycle follows until everyone agrees that each document describes how that piece of the system should work. These reviews can be done by simply sending out the document and gathering responses, but I find it more productive to bring the users from the workshops together and quickly review and refine the document with them (this often drives out missed details, which can be accommodated rapidly with the agreement of the product owner and the users in the room).
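The specification itself stays technology-neutral, but if a team tracks its use cases in a tool, it can help to capture the template as structured data. A minimal Python sketch, assuming a hypothetical `UseCase` record whose fields mirror the template above; the `missing_alternates` check is an illustrative review aid, not part of any standard template:

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Step:
    """One bullet in a path: what the user does, plus any rule checked."""
    action: str     # what the user does, controls used, data captured
    rule: str = ""  # conditional logic that can trigger an alternate path

@dataclass
class UseCase:
    """Hypothetical record mirroring the use case template."""
    name: str
    actor: str                # who does it
    trigger: str              # how the case is triggered
    preconditions: List[str]  # conditions that dictate the case is executed
    outcome: str              # what the outcome should be
    summary: str              # brief description of purpose and flow
    normal_path: List[Step] = field(default_factory=list)
    # Alternate paths, keyed by the normal-path rule that raises them.
    alternate_paths: Dict[str, List[Step]] = field(default_factory=dict)

    def missing_alternates(self) -> List[str]:
        """Rules in the normal path that have no alternate path written yet."""
        return [s.rule for s in self.normal_path
                if s.rule and s.rule not in self.alternate_paths]

uc = UseCase(
    name="Register new claim",
    actor="Claim handler",
    trigger="Customer reports a loss by phone",
    preconditions=["Customer holds a policy"],
    outcome="Claim recorded with a reference number",
    summary="Handler finds the policy, captures loss details and creates a claim.",
    normal_path=[
        Step("Handler searches for the policy by number", rule="Policy is not active"),
        Step("Handler enters the loss date and description"),
    ],
)
print(uc.missing_alternates())
```

A check like this is only a prompt for the review session: it flags rules that nobody has yet written an alternate path for.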

Technical documentation

With the functional specification complete and agreed, the architect takes over and transposes the functional details into a physical specification. I’m a firm believer that development is a creative vocation, so developers should be left room to be creative while being given enough information to implement the application within a set of boundaries. As such, the technical specifications usually take the form of:

- Data or domain object model (depending on your preference of data first or objects first)

- Low fidelity interface mock ups

- Implementation notes.

This should be all the developer needs to be able to implement the required functionality – the specifics are up to them provided they are following the boundaries of the overall application architecture and framework – for instance your architecture may prescribe NHibernate as an ORM layer, hydrating and persisting aggregate root domain objects through a repository pattern, surfaced in the UI through MVVM using Prism and so on.
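The stack in that example is .NET (NHibernate, Prism). The sketch below only illustrates the shape of the aggregate root and repository idea, in Python. `Claim`, `ClaimRepository` and `InMemoryClaimRepository` are hypothetical names, the in-memory dictionary stands in for a real ORM-backed implementation, and none of it is NHibernate’s API:

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Document:
    name: str

@dataclass
class Claim:
    """Hypothetical aggregate root: documents change only through the claim."""
    claim_id: str
    handler: str
    documents: List[Document] = field(default_factory=list)

    def attach_document(self, name: str) -> None:
        # Invariants for the whole aggregate are enforced here, at the root.
        if any(d.name == name for d in self.documents):
            raise ValueError(f"document {name!r} is already attached")
        self.documents.append(Document(name))

class ClaimRepository(ABC):
    """Persistence boundary for Claim aggregates; callers never touch the ORM."""

    @abstractmethod
    def get(self, claim_id: str) -> Optional[Claim]: ...

    @abstractmethod
    def save(self, claim: Claim) -> None: ...

class InMemoryClaimRepository(ClaimRepository):
    """Stand-in for an ORM-backed repository, handy for unit tests."""

    def __init__(self) -> None:
        self._store: Dict[str, Claim] = {}

    def get(self, claim_id: str) -> Optional[Claim]:
        return self._store.get(claim_id)

    def save(self, claim: Claim) -> None:
        self._store[claim.claim_id] = claim

repo = InMemoryClaimRepository()
repo.save(Claim("C-001", "alice"))
claim = repo.get("C-001")
claim.attach_document("loss-report.pdf")
repo.save(claim)
```

Keeping the repository behind an abstract interface is what lets the architecture prescribe the persistence approach while developers stay free in how they build each feature behind it.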

Active development and the show and tell

As the construction iteration starts, the functional and technical documentation is handed to the developers, and this is done formally too. As the development manager, architect or technical lead, your job is not only to design the system and solve the complex technical issues; you are also the interface between the business and the technical team, so you should speak both languages. (For this reason, it’s my opinion that the best leads are socially and business-aware programmers themselves, and that they should stay active and hands-on with code.)

At the start of each iteration, my teams hold an iteration planning meeting. All the developers involved in the product gather with the analyst, the product owner and you as the leader, and we go through all of the functional and technical specifications in absolute detail, so that everyone has a deep understanding of the requirements and of how we are going to implement them. This often involves revisiting the earlier workshop material on whiteboards alongside the documentation. The programmers are expected to contribute in detail to how the implementation should proceed, and also to refine the estimates, which they should own and take responsibility for.

This can take the form of planning poker or one of the other fun approaches to planning, but my preference is simply to work with the programmers to split each use case into individual tasks of 1 to 4 hours and have them estimate those tasks themselves. This planning session gives us a very detailed view of what is involved in the implementation and lets us compare it with our original high-level estimates and with the resources and velocity available for that iteration. Where there is slack, we can fill it with more polishing; where there is an overrun, we can start cutting nice-to-have functionality from the scope so that the work for the iteration fits the velocity we have available.
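The slack-or-overrun check can be sketched in a few lines. This Python sketch assumes the available velocity is expressed as hours of capacity and that tasks are flagged as nice-to-have during planning. The `Task` type, the helper names and the largest-first cutting order are all illustrative assumptions; in practice the product owner decides what gets cut.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Task:
    use_case: str
    description: str
    hours: float
    nice_to_have: bool = False

def oversized_or_tiny(tasks: List[Task]) -> List[Task]:
    """Tasks outside the 1-4 hour range, which should be split or merged."""
    return [t for t in tasks if not 1 <= t.hours <= 4]

def fit_to_capacity(tasks: List[Task],
                    capacity_hours: float) -> Tuple[List[Task], List[Task], float]:
    """Cut nice-to-have tasks (largest first) until the plan fits capacity.

    Returns (kept, cut, slack). Positive slack is room for extra polish;
    negative slack means the essential work alone exceeds capacity.
    """
    kept = list(tasks)
    cut: List[Task] = []
    optional = sorted((t for t in tasks if t.nice_to_have),
                      key=lambda t: t.hours, reverse=True)
    for task in optional:
        if sum(t.hours for t in kept) <= capacity_hours:
            break
        kept.remove(task)
        cut.append(task)
    return kept, cut, capacity_hours - sum(t.hours for t in kept)

tasks = [
    Task("Register claim", "Policy search screen", 4),
    Task("Register claim", "Loss details form", 3),
    Task("Register claim", "Validation rules", 2),
    Task("Register claim", "Keyboard shortcuts", 3, nice_to_have=True),
    Task("Register claim", "Animated progress bar", 2, nice_to_have=True),
]
kept, cut, slack = fit_to_capacity(tasks, capacity_hours=10)
print([t.description for t in cut], slack)
```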

I should note that the initial iteration plan is built from the high-level estimates, which means you need a good estimator pulling those estimates together. If the estimator isn’t a programmer herself, there could be large differences between the high-level and detailed plans, and that is going to cause you significant problems. On my last project, over 18 iterations, my margin of error between the high- and low-level estimates was around 2%. Had it been, say, 20% and optimistic, you would essentially have lost one member of a five-person team to bad estimating.
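The cost of optimistic estimates is straightforward arithmetic if the iteration was planned to fill the team’s capacity: an error of e leaves e × team size person-equivalents of unplanned work. A small Python sketch with hypothetical helper names and illustrative hours (the 500-hour figure is an assumption, not from the project described):

```python
def estimate_error(high_level_hours: float, detailed_hours: float) -> float:
    """Relative gap between estimates; positive means the high-level one was optimistic."""
    return (detailed_hours - high_level_hours) / high_level_hours

def people_lost(team_size: int, error: float) -> float:
    """Person-equivalents of unplanned work when a full-capacity plan is off by `error`."""
    return team_size * error

# Illustrative figures: a five-person team and a 500-hour high-level estimate.
for detailed in (510, 600):  # 2% and 20% optimistic respectively
    err = estimate_error(500, detailed)
    print(f"{err:.0%} optimistic -> {people_lost(5, err):.1f} people")
```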

By the end of the iteration planning meeting (I usually allocate an entire day for this), the whole dev team is up to speed with the requirements, has an idea of how to implement everything, understands what tasks need to be done over the iteration and can get cracking. At this point it’s tempting to become insular and just get on with the development while your users get on with their day jobs, but that is a mistake. The users need to feel continually engaged, and you need them to have sight of the emerging product, because then they are more likely to realise very early when things might be going askew.

This review doesn’t need to be formal, and it doesn’t need meetings and presentations. It’s useful simply to get a handful of users crowded around one of the programmers’ computers for 10 to 15 minutes each week to look at how the system is progressing. These show and tell sessions can be extremely valuable for getting early visibility of the small issues that always crop up in dev projects, and for fixing them.

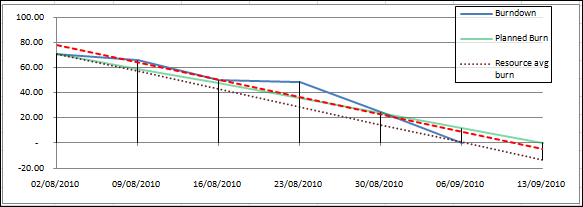

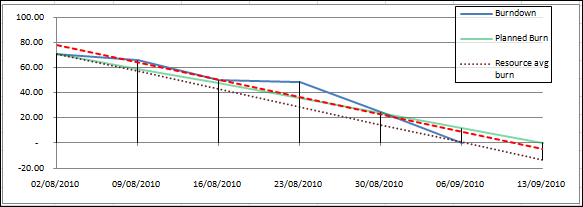

The final mechanism for engagement is the burn-down. People like to see progress, especially management and the people whose necks are on the line for delivering on time and on budget. On a small team this can be very simple. The individual tasks identified during iteration planning are written on Post-it notes and stuck to a whiteboard grid, where each row represents a use case being developed (each sticky goes in the row of the use case it belongs to) and the columns represent the state of the item: todo, in progress, for test and done. This is a typical scrum board.
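The board described above maps naturally onto a small data structure. A minimal Python sketch with hypothetical `State` and `TaskBoard` types: rows are use cases, columns are the four states, and `remaining_hours` gives the figure a team lead would plot on the burn-down. Task names are assumed to be unique.

```python
from enum import Enum
from typing import Dict, List, Tuple

class State(Enum):
    """Board columns, left to right."""
    TODO = "todo"
    IN_PROGRESS = "in progress"
    FOR_TEST = "for test"
    DONE = "done"

class TaskBoard:
    """Rows are use cases, columns are states; each task is one sticky note."""

    def __init__(self) -> None:
        # task name (assumed unique) -> (use case row, current column, estimated hours)
        self._tasks: Dict[str, Tuple[str, State, float]] = {}

    def add(self, use_case: str, task: str, hours: float) -> None:
        self._tasks[task] = (use_case, State.TODO, hours)

    def move(self, task: str, state: State) -> None:
        use_case, _, hours = self._tasks[task]
        self._tasks[task] = (use_case, state, hours)

    def grid(self) -> Dict[str, Dict[State, List[str]]]:
        """Render the board as {use case: {column: [task names]}}."""
        rows: Dict[str, Dict[State, List[str]]] = {}
        for task, (use_case, state, _) in self._tasks.items():
            row = rows.setdefault(use_case, {s: [] for s in State})
            row[state].append(task)
        return rows

    def remaining_hours(self) -> float:
        """Estimated hours on every task not yet done: one burn-down data point."""
        return sum(h for _, s, h in self._tasks.values() if s is not State.DONE)

board = TaskBoard()
board.add("Register claim", "Policy search screen", 4)
board.add("Register claim", "Loss details form", 3)
board.add("Close claim", "Settlement screen", 2)
board.move("Policy search screen", State.DONE)
board.move("Loss details form", State.IN_PROGRESS)
print(board.remaining_hours())
```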

At any time, anyone can look at the board and get a good visual indication of where the team is during that iteration. Each week, or even daily, the team lead should transpose the current amount of work remaining onto a line chart showing progress over time, the goal line and a trend line that indicates whether the team will complete all the work by the end of the iteration.
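One common way to draw the trend line is an ordinary least-squares fit through the daily remaining-work figures, extended to where it crosses zero. The text doesn’t prescribe a method, so the straight-line goal and linear trend in this Python sketch are assumptions, and the function names and sample data are illustrative:

```python
from typing import List, Optional, Tuple

def goal_line(total: float, days: int) -> List[float]:
    """Ideal burn-down: remaining work falls linearly from `total` to 0."""
    return [total - total * d / days for d in range(days + 1)]

def trend_line(remaining: List[float]) -> Tuple[float, float]:
    """Least-squares fit of remaining = intercept + slope * day (days 0, 1, ...)."""
    n = len(remaining)
    if n < 2:
        raise ValueError("need at least two data points for a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(remaining) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(remaining))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def projected_finish(remaining: List[float]) -> Optional[float]:
    """Day on which the trend reaches zero, or None if work is not burning down."""
    intercept, slope = trend_line(remaining)
    if slope >= 0:
        return None
    return -intercept / slope

# Example: a 10-day iteration with 100 hours planned and five days of data so far.
remaining = [100, 92, 85, 75, 68]
finish = projected_finish(remaining)
print(goal_line(100, 10)[4], round(finish, 1), finish <= 10)
```

In this example the trend crosses zero after day 12, past the end of the 10-day iteration, which is exactly the early warning the chart is there to give.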

For larger teams, especially distributed ones, you need some way of tracking this in a centralised tool such as TFS work items, FogBugz, VersionOne or a similar product, which lets the team carry out work in the same way and produces the burn-down automatically. In these circumstances it’s a good idea to have a project wall for those interested in progress: a large LCD on the wall rotating between different views of the iteration, such as the burn-down and work completed.

Ending the iteration

At the end of the iteration, the use cases should be complete, tested and essentially ready for delivery. At this point the work can either be released to acceptance testing or, for larger applications where delivering small chunks doesn’t add value, demonstrated back to the users. Testing is an interesting point. Developers should take responsibility for their work and test it to ensure that all of the paths through the use case work as prescribed, but a dedicated test team can augment this significantly, and for larger developments it’s an absolute necessity. In that case, the output from the iteration should feed into the next testing iteration, and defects should be fixed before the work is released to user acceptance testing. The testing cycle (test, fix, retest, UAT, FAT, etc.) is a topic in itself, so I’ll leave it there for now.

Conclusion

In summary, engaging users and keeping them engaged is critical to the success of any development project; ignore users at your peril. I can testify that over numerous successful projects, keeping users engaged has been pivotal to successful delivery. The last thing you want is to keep your project insular, deliver, and then find that the finished product doesn’t match up to users’ expectations. That’s a sure-fire way to ensure project failure.