Today I was asked a few questions about how garbage collection works, and it dawned on me that even though we’re on .NET 4.0 these days, there are a lot of .NET developers out there who don’t actually understand the mechanics of the garbage collector. So, even though this article is probably 10 years too late for a lot of people, I hope my rudimentary explanation will prove useful to those who don’t know about the concept or what it offers them. I’m going to cover:

- What garbage collection is

- Some general information about the .NET garbage collector (GC)

- What actually happens when you allocate an object

- The .NET garbage collection process

- What the different generations are and what purpose they serve

- How a GC gets triggered

What is garbage collection?

Garbage collection is an abstraction that frees the programmer from worrying about how memory is allocated and released. In unmanaged code the programmer has to allocate and free every byte the application uses, with malloc() and free() in C or new and delete in C++. Mistakes here have been the source of numerous bugs in software, and they are often among the hardest to track down.

Managed platforms like .NET take this burden away from the programmer by using garbage collection. The runtime tracks references to objects and works out when an object can safely be let go, so in C# you can safely write:

void MyMethod() { SomeLargeObject obj = new SomeLargeObject(); }

Instead of the C++ equivalent:

void MyMethod() { SomeLargeObject* obj = new SomeLargeObject; delete obj; }

In the latter example, if you forgot to delete the allocated object, the memory it consumed would essentially be lost: a typical memory leak. In the former example, the garbage collector works out that the object no longer has any live references, and its memory is reclaimed during a later GC cycle.

.NET garbage collection

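In .NET the garbage collector comes in two flavours, workstation and server, and on top of that it can run either blocking (non-concurrent) or concurrent. The two settings are often confused with each other, but they are separate. Workstation GC is the default and is aimed at client applications, whilst server GC uses a heap and a dedicated collection thread per logical processor and is aimed at throughput. When the GC determines it needs to perform some clean up, a blocking collection pauses your application’s managed threads, cleans up, and then resumes them. With concurrent GC (on by default for workstation, and called background GC from .NET 4.0), most of the work of a full collection happens on a separate thread while your application keeps running. The quick collections of recently allocated objects still pause your threads, but only briefly.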
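If you want to check how a running process is actually configured, the runtime exposes this through System.Runtime.GCSettings. The following is only a minimal sketch (the GcModeReport class and its Describe method are names made up for illustration): IsServerGC reports whether server GC is in use, and a LatencyMode of Interactive corresponds to concurrent collection being enabled, Batch to it being disabled.

```csharp
using System;
using System.Runtime;

static class GcModeReport
{
    // Builds a one-line description of how the GC is configured
    // for the current process.
    public static string Describe()
    {
        string flavour = GCSettings.IsServerGC ? "server" : "workstation";
        return string.Format("GC flavour: {0}, latency mode: {1}",
                             flavour, GCSettings.LatencyMode);
    }

    static void Main()
    {
        Console.WriteLine(Describe());
    }
}
```

What it prints depends on the application’s configuration and on the machine, so treat the output as a diagnostic rather than something to rely on in code.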

It’s important to note that every process has a maximum amount of memory that it can address, and the managed heap has to fit inside that limit. The size available depends on the O/S. On 32-bit Windows a process gets 2 GB of user address space by default (this can be increased to 3 GB with a boot configuration switch, for executables marked as large address aware), whilst on 64-bit Windows it is significantly higher (8 TB from memory, but don’t quote me).

The GC doesn’t run continually, and this is an important observation to make. It runs whenever certain conditions are met, which I’ll discuss shortly. The thing to note is that when an object is no longer referenced it is not necessarily collected immediately; rather, it will be collected at some later point when the GC runs. This can be problematic for objects that hold contended resources. Take a file handle, for instance: it could be a while before the handle is released, and until then the file stays locked against other processes or other areas of your code.

To cope with this scenario .NET has the IDisposable interface. By implementing IDisposable, you write code that releases these contended resources as soon as the object is no longer needed, before the object holding them is actually collected. If you wrap the use of an IDisposable object in a using statement, Dispose is called when execution leaves the using block (even if an exception is thrown), thereby releasing the file handle in our example and making it available to other processes. You can also call Dispose on the object yourself, but the using statement is less prone to forgetfulness!

The actual memory used by the object will still only be released later, when the GC runs.
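To make the file handle example concrete, here is a minimal sketch. LogFile and DisposeDemo are hypothetical names invented for this illustration, and the file path is just a temporary file; the point is only that the handle is released when the using block ends, well before any garbage collection touches the object.

```csharp
using System;
using System.IO;

// Hypothetical wrapper around an exclusively opened file, for illustration only.
sealed class LogFile : IDisposable
{
    private readonly FileStream _stream;
    public bool IsDisposed { get; private set; }

    public LogFile(string path)
    {
        // FileShare.None: nobody else can open the file while we hold it.
        _stream = new FileStream(path, FileMode.Create, FileAccess.Write, FileShare.None);
    }

    public void WriteByte(byte value)
    {
        _stream.WriteByte(value);
    }

    public void Dispose()
    {
        if (IsDisposed) return;   // calling Dispose twice should be harmless
        _stream.Dispose();        // flushes and releases the file handle now
        IsDisposed = true;
    }
}

static class DisposeDemo
{
    // Returns true if the file could be reopened exclusively after the using block.
    public static bool Run(string path)
    {
        LogFile log = new LogFile(path);
        using (log)
        {
            log.WriteByte(42);
        }

        // The handle has been released, although 'log' itself has not been collected.
        using (FileStream reopened = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.None))
        {
            return log.IsDisposed && reopened.Length == 1;
        }
    }

    static void Main()
    {
        string path = Path.GetTempFileName();
        Console.WriteLine("Reopened after using block: {0}", Run(path));
        File.Delete(path);
    }
}
```

Without the using block (or an explicit Dispose call), the second FileStream would be expected to fail with an IOException for as long as the first handle stayed open.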

What happens when you allocate an object

Before we look further at the GC, it’s important to look at what happens when you “new up” an object. It is allocated on the managed heap, of which there are two with subtle differences – the small object heap and the large object heap.

The small object heap

Small objects (those requiring less than 85,000 bytes) are allocated on the small object heap. When the GC runs and reclaims space on the small object heap, it does one thing that it doesn’t do on the large object heap: it compacts memory. It removes the holes left in the heap by the now vacant memory. More on this shortly.

The large object heap

When you allocate an object of 85,000 bytes or more (these are bytes within the object itself, not the objects it references), it is allocated on the large object heap. The large object heap isn’t compacted, because moving large chunks of memory around is slow, so collections leave holes in it. This should be considered in the design of your code; again, I’ll explain why shortly.

Taking out the trash

When a garbage collection is triggered it runs through two phases: a marking phase and a compacting phase.

The marking phase is all about working out which objects are still in use and which ones aren’t. It starts from something called GC roots and walks outwards through every object they reference. A GC root is an anchor point from which objects are tracked: a local variable in a method that is currently executing on any thread, a static variable, or a managed object that has been passed to unmanaged code. Objects with finalizers are also kept alive by the runtime through its finalization queue, and they take two GC cycles to get rid of: the first finds the object unreachable and queues its finalizer to run, and a later one reclaims the memory (you can avoid this by calling GC.SuppressFinalize from your dispose code).

So, the GC works out which objects can still be reached, directly or indirectly, from the GC roots. If an object can be reached it is marked as live, and on it goes until every reference has been followed. Each object is visited only once: if the walk arrives at an object that has already been marked, it stops there. This is what allows circular references to exist in your graphs without confusing the collector.
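The idea of marking can be illustrated with a toy model. This is emphatically not how the CLR implements it; Node and MarkPhaseSketch are made-up names, and the "heap" is just a handful of objects that hold references to each other. It shows the two properties described above: each object is visited once, and a cycle that no root can reach is still treated as garbage.

```csharp
using System;
using System.Collections.Generic;

// Toy stand-in for a heap object: a name plus its outgoing references.
sealed class Node
{
    public readonly string Name;
    public readonly List<Node> References = new List<Node>();
    public Node(string name) { Name = name; }
}

static class MarkPhaseSketch
{
    // Returns the set of nodes reachable from the given roots.
    public static HashSet<Node> Mark(IEnumerable<Node> roots)
    {
        HashSet<Node> live = new HashSet<Node>();
        Stack<Node> pending = new Stack<Node>(roots);
        while (pending.Count > 0)
        {
            Node current = pending.Pop();
            if (!live.Add(current)) continue;   // already marked, so stop here
            foreach (Node next in current.References) pending.Push(next);
        }
        return live;
    }

    static void Main()
    {
        Node a = new Node("A"), b = new Node("B"), c = new Node("C"), d = new Node("D");
        a.References.Add(b);
        b.References.Add(a);   // A and B reference each other and A is a root
        c.References.Add(d);
        d.References.Add(c);   // C and D reference each other but nothing roots them

        HashSet<Node> live = Mark(new[] { a });
        foreach (Node n in new[] { a, b, c, d })
        {
            Console.WriteLine("{0}: {1}", n.Name, live.Contains(n) ? "live" : "garbage");
        }
    }
}
```

In this model A and B come out live and C and D come out as garbage, even though C and D still reference each other.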

At the end of the mark phase, the memory that wasn’t marked live is released and the compacting phase begins. This compacting phase only occurs on the small object heap; it doesn’t happen on the large object heap as it would be performance intensive (moving large chunks of memory around). The compacting phase is all about closing the holes in the heap. Take the diagram below. Let’s say you allocate 3 objects on the small object heap, named Obj1 to Obj3; they are allocated sequentially at the end of the heap. When Obj2 is no longer used, it gets cleaned up by the garbage collector and the compaction phase re-organises the heap to remove the hole that it left:

This has a number of advantages. First, a block of memory must be allocated as one contiguous block, otherwise the request fails with an OutOfMemoryException. If you had holes in your heap of say 850 bytes each, totalling 1,000,000 bytes free, the largest object you could allocate would still be 850 bytes, because that would be the largest piece of contiguous memory available. Reshuffling the small object heap like this keeps your memory lean and mean.

A side effect of this is that new allocations become extremely quick, as they always go at the end of the heap. There’s no need to scan for space; the space is just there all in one block, and there’s either enough of it or there’s not.

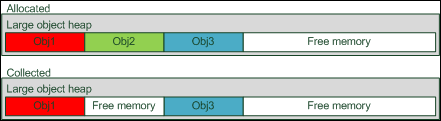

The large object heap is a different beast. If you recall, any object of 85,000 bytes or more is allocated on the large object heap, which gets collected but isn’t compacted. Taking our example above of 3 objects, this time on the large object heap, once Obj2 has been collected you would be left with Obj1, a hole where Obj2 used to be, and then Obj3.

Notice the hole. If an allocation comes along that fits into this hole, the hole will be used, but no compaction is done. This can have implications. Say you were to fill two lists in an interleaved manner (add a new object to list A, then a new one to list B), with each element being 10 MB. You do this 100 times, so you allocate a total of 10 × 100 × 2 = 2,000 MB. Let’s say that’s the total amount of memory free, and you’ve now stuffed your memory full to capacity.

Releasing one of these lists will give you back 1,000 MB, but the largest chunk of memory available on the large object heap will be 10 MB, because the freed elements alternate with the ones still in use and the heap doesn’t get compacted. As such, any allocation on the large object heap from that point that is over 10 MB will fail with an OutOfMemoryException. So you see, when working with large amounts of data this is a consideration to bear in mind. I’ll explore this more in a future post with some sample code that I’ve written to demonstrate the point.
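The arithmetic of the interleaved scenario can be checked with a small model. This is a simulation only: it allocates no real memory and makes no claim about how the runtime lays out the large object heap. LohFragmentationModel and its members are hypothetical names, and each array slot stands for one 10 MB element owned by list A or list B.

```csharp
using System;

static class LohFragmentationModel
{
    public const int SlotMb = 10;     // each list element is modelled as 10 MB
    private const char Free = '\0';   // marker for an unused slot

    // Lays out elements alternately: A, B, A, B, ...
    public static char[] AllocateInterleaved(int elementsPerList)
    {
        char[] slots = new char[elementsPerList * 2];
        for (int i = 0; i < slots.Length; i++)
        {
            slots[i] = (i % 2 == 0) ? 'A' : 'B';
        }
        return slots;
    }

    // Frees every slot owned by one list. Nothing is moved (no compaction).
    public static void Release(char[] slots, char owner)
    {
        for (int i = 0; i < slots.Length; i++)
        {
            if (slots[i] == owner) slots[i] = Free;
        }
    }

    public static int TotalFreeMb(char[] slots)
    {
        int free = 0;
        foreach (char s in slots) if (s == Free) free++;
        return free * SlotMb;
    }

    // Size of the largest run of adjacent free slots, in MB.
    public static int LargestFreeRunMb(char[] slots)
    {
        int best = 0, run = 0;
        foreach (char s in slots)
        {
            run = (s == Free) ? run + 1 : 0;
            if (run > best) best = run;
        }
        return best * SlotMb;
    }

    static void Main()
    {
        char[] heap = AllocateInterleaved(100);
        Release(heap, 'A');
        // Prints: Total free: 1000 MB, largest contiguous block: 10 MB
        Console.WriteLine("Total free: {0} MB, largest contiguous block: {1} MB",
                          TotalFreeMb(heap), LargestFreeRunMb(heap));
    }
}
```

Had list A been filled completely before list B, the same model would report a single 1,000 MB free run, which is why the order of large allocations matters.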

I should point out now that this is a slight oversimplification: the GC doesn’t actually check and mark every object on every run. Instead, it runs against different generations.

What are these generations all about?

The GC in .NET has the concept of generations. A generation is a grouping of the objects on the heap. I’m tempted to say it represents the age of the object, but that isn’t strictly true; rather, it indicates the stickiness of an object, that is, how many collections it has survived. Freshly allocated objects on the small object heap are given generation 0 (there are three generations in total: 0, 1 and 2), indicating they are fresh and are therefore the most likely to be cleaned up next. Think about it: you usually allocate a fresh object, do something with it, and then you’re finished with it.

So new objects on the small object heap are given an initial generation of 0. When the GC runs, it does so against a generation: a generation 0 collection clears only generation 0 objects that are no longer referenced, a generation 1 collection covers generations 0 and 1, and a generation 2 collection (known as a full garbage collection) covers generations 0, 1 and 2. When an object survives a garbage collection, it is promoted to the next generation up.

What this means is that the next time a generation 0 collection is run, it won’t bother checking the promoted objects, because it’s quite likely that those objects are still referenced. After all, they have survived one or more GC cycles already, so they won’t be collected until a more aggressive GC is executed. Promotion stops at generation 2, of course, so if a gen 2 object lives through a GC cycle, it will still be gen 2.

The only additional thing to mention with this is that large objects (on the large object heap) are allocated as generation 2 directly whilst small objects are allocated as generation 0. You cannot manually allocate a generation 1 object.
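Promotion can be observed from code with GC.GetGeneration. The sketch below (GenerationDemo and its methods are illustrative names) forces collections purely to make the effect visible. A small object that stays referenced would normally be expected to report generation 0, then 1, then 2, and a fresh 85,000 byte array to report generation 2 straight away, though the exact promotion behaviour is an implementation detail of the runtime.

```csharp
using System;

static class GenerationDemo
{
    // Records the generation of a small object when fresh, and again after
    // each of two forced collections that it survives.
    public static int[] ObserveSmallObject()
    {
        object small = new object();
        int[] seen = new int[3];
        seen[0] = GC.GetGeneration(small);
        for (int i = 1; i < seen.Length; i++)
        {
            GC.Collect();                       // full collection; 'small' is still referenced
            seen[i] = GC.GetGeneration(small);
        }
        GC.KeepAlive(small);                    // make sure it counts as live throughout
        return seen;
    }

    // An array this size lands on the large object heap.
    public static int GenerationOfFreshLargeArray()
    {
        byte[] large = new byte[85000];
        return GC.GetGeneration(large);
    }

    static void Main()
    {
        int[] seen = ObserveSmallObject();
        Console.WriteLine("Small object generations: {0}, {1}, {2}", seen[0], seen[1], seen[2]);
        Console.WriteLine("Fresh large array generation: {0}", GenerationOfFreshLargeArray());
    }
}
```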

When does a garbage collection start?

And so, the last thing to talk about is how a GC is initiated. There are 3 mechanisms:

- Generation thresholds

- Manual collection

- Low memory conditions

Manual collection and low memory conditions

I’ll cover these two first as they are the simplest. If the machine your code is running on is starting to run low on memory, the runtime is notified by the operating system and automatically triggers a GC cycle. This is all handled for you, no code required. The other mechanism is to call GC.Collect manually, which performs a garbage collection against the generation you pass as a parameter (plus any below it), or a full collection if you pass nothing. This can be useful, but the need for it is reasonably rare and you’re usually better off relying on the thresholds.
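For completeness, this is roughly what a manual collection looks like. The sketch uses GC.Collect(int) together with GC.CollectionCount(int), which reports how many times a given generation has been collected so far; ManualCollectDemo and CollectAndCount are names invented for the example.

```csharp
using System;

static class ManualCollectDemo
{
    // Forces a collection of the given generation (and any below it) and
    // returns how many collections of that generation happened as a result.
    public static int CollectAndCount(int generation)
    {
        int before = GC.CollectionCount(generation);
        GC.Collect(generation);
        return GC.CollectionCount(generation) - before;
    }

    static void Main()
    {
        Console.WriteLine("Highest generation: {0}", GC.MaxGeneration);
        for (int gen = 0; gen <= GC.MaxGeneration; gen++)
        {
            Console.WriteLine("Gen {0}: {1} collection(s) during the forced GC",
                              gen, CollectAndCount(gen));
        }
    }
}
```

This is a diagnostic sketch rather than a pattern to copy into production code; forcing collections tends to work against the runtime’s own tuning.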

Thresholds

Each of the three generations has a GC threshold. As the heap fills with new objects, the amount allocated in a generation may start to encroach on its threshold, and when the threshold is exceeded, a GC cycle for the generation in question is triggered. This can promote objects into the next generation, which in turn may push that generation over its own threshold and trigger further collections. You don’t manage these thresholds directly; the runtime tunes them dynamically for you.

Summary

So that’s my ham-fisted attempt at explaining the garbage collector in .NET. I hope it comes in handy for someone; it was certainly useful to refresh my own memory! I’ll post code in the next few days that demonstrates what I mentioned about the large object heap and fragmentation, which will hopefully make some of this clearer.
